Introduction

Artificial intelligence (AI) increasingly shapes our daily lives, both at work and at home. The next few generations will likely depend on it even more, because it has the potential to revolutionize entire industries. Well-known examples include ChatGPT, Midjourney, and DALL-E. According to PwC, AI could contribute up to $15.7 trillion to the world economy by 2030. However, AI still has problems that require continuous improvement and innovation.

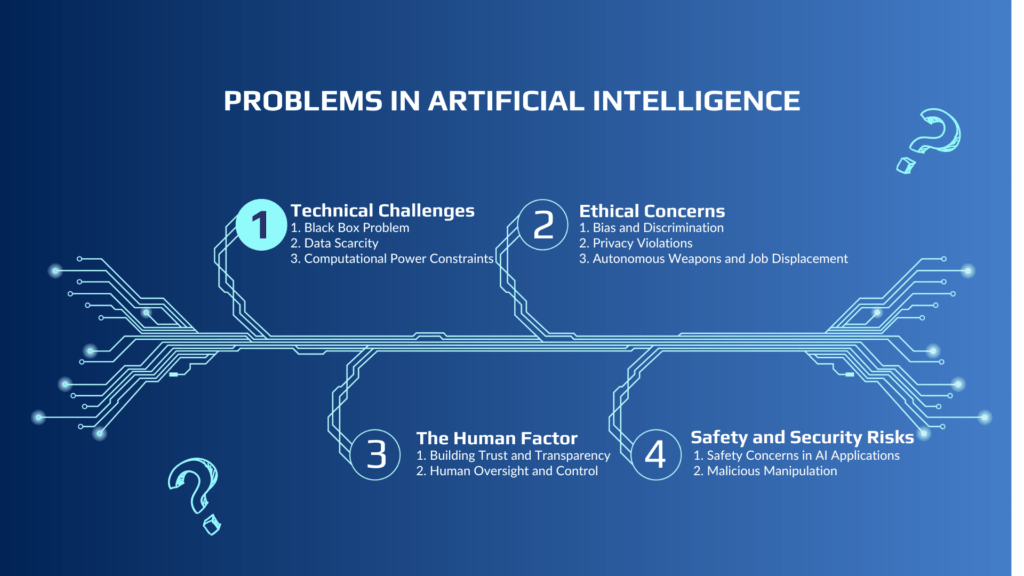

This article discusses some of the main problems in artificial intelligence, grouped into four categories: technical challenges, ethical concerns, safety and security risks, and the human factor. Understanding these issues gives us a clearer picture of AI technology’s current state and helps identify areas for improvement. As AI evolves into a more refined and autonomous technology, examining its challenges becomes essential.

Technical Challenges:

Black Box Problem:

The black box problem in AI refers to the lack of transparency and understandability in the decision-making process of algorithms. This opacity prevents us from fully comprehending how AI systems arrive at their judgments or conclusions, making it difficult to trust and have confidence in these systems. The lack of transparency hinders our ability to interrogate the inner workings of AI algorithms, leading to skepticism around AI’s fairness, accountability, and reliability.

One example of the black box problem is automated decision-making processes, such as loan approvals or job application screenings. If an algorithm makes discriminatory or unfair decisions due to biased training data or hidden variables, it can perpetuate existing inequalities and discrimination without explanation.

Another example is healthcare, where AI systems are used for diagnosis or treatment recommendations. If clinicians cannot see why a system reached a particular recommendation, they have little basis for verifying it or spotting an error. Addressing the black box problem is therefore crucial for fostering trust in AI systems. Researchers are working on explainable AI models that make the decision-making process easier to understand. The goal is to ensure that judgments are fair and well-informed while also establishing trust among users and stakeholders.
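One common way to probe an opaque model is permutation importance: shuffle one input feature at a time and measure how much accuracy drops. The sketch below is a minimal illustration in plain Python, not a production explainability tool. The `black_box` loan model, the feature layout, and the sample rows are all hypothetical and made up for this example.

```python
import random

def permutation_importance(predict, rows, labels, n_features, repeats=20, seed=0):
    """Average drop in accuracy when each feature column is shuffled."""
    rng = random.Random(seed)

    def accuracy(data):
        return sum(predict(r) == y for r, y in zip(data, labels)) / len(labels)

    baseline = accuracy(rows)
    importances = []
    for j in range(n_features):
        drops = []
        for _ in range(repeats):
            column = [r[j] for r in rows]
            rng.shuffle(column)  # break the link between feature j and the label
            shuffled = [r[:j] + (v,) + r[j + 1:] for r, v in zip(rows, column)]
            drops.append(baseline - accuracy(shuffled))
        importances.append(sum(drops) / repeats)
    return importances

# Hypothetical opaque model: approves a loan when feature 0 (income) exceeds 50.
# It ignores feature 1 entirely, which the audit should reveal.
def black_box(row):
    return row[0] > 50

rows = [(30, 1), (60, 0), (80, 1), (40, 0), (55, 1), (20, 0)]
labels = [black_box(r) for r in rows]
scores = permutation_importance(black_box, rows, labels, n_features=2)
print(scores)
```

A feature whose score is zero has no influence on the model's decisions for this data, while a large score marks a feature the model relies on. This does not explain the model fully, but it gives reviewers a starting point for questions.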

Data Scarcity:

In the world of AI, the old saying “garbage in, garbage out” holds true. Large, high-quality datasets are important for training AI models effectively. These datasets serve as the basis on which AI algorithms learn patterns and make predictions.

Accessing and curating such datasets can be a challenging task, especially in niche domains. Finding relevant data that covers a wide range of scenarios is often difficult due to limited availability and accessibility. Also, making sure the data is correct and of high quality takes a lot of work and resources.

Data scarcity holds back AI development in many fields and applications. In healthcare, for example, broad and complete medical datasets are hard to obtain because of privacy laws and because institutions do not share data readily. Similarly, developers of self-driving cars struggle to collect real-world driving data from regions that are remote or difficult to access.

To solve this issue, we need new ways to make the most of limited data, such as data synthesis, which generates artificial training examples, or transfer learning, which adapts a model trained on an existing dataset to a new domain. Collaboration between organizations can also make it easier to share data while still following privacy rules.
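As a rough illustration of data synthesis, the sketch below enlarges a tiny dataset by adding small random noise to each real sample. This is only the simplest form of the idea (often called jitter augmentation), and it assumes numeric features where small perturbations do not change the label. The function name `synthesize`, the noise level, and the sample data are hypothetical.

```python
import random

def synthesize(samples, copies=3, noise=0.05, seed=0):
    """Return the originals plus `copies` jittered variants of each sample."""
    rng = random.Random(seed)
    out = list(samples)
    for features, label in samples:
        for _ in range(copies):
            # Perturb every feature with Gaussian noise; keep the label unchanged.
            jittered = tuple(v + rng.gauss(0.0, noise) for v in features)
            out.append((jittered, label))
    return out

# Made-up two-feature samples, for illustration only.
real = [((0.9, 1.1), "cat"), ((3.0, 2.8), "dog")]
augmented = synthesize(real)
print(len(augmented))  # 2 originals + 2 * 3 synthetic = 8
```

Synthetic samples like these can reduce overfitting, but they add no genuinely new information, so they complement real data collection rather than replace it.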

In short, progress in AI depends on finding better ways to access and organize large, high-quality datasets.

Computational Power Constraints:

When it comes to training and deploying complex AI models, computational resources play a crucial role. The process of training these models requires significant computing power to crunch large amounts of data and optimize the intricate algorithms at play.

However, limitations imposed by hardware capabilities and energy consumption can pose challenges in this area. Not all systems have access to high-end GPUs or specialized hardware that is ideal for AI tasks. This can result in longer training times or even make certain models infeasible to use.

Furthermore, computational constraints also affect the scalability and efficiency of AI systems. As models become more complex or the amount of data being processed increases, the need for more powerful computing resources arises. Without adequate resources, scaling up becomes difficult or even impossible, leading to bottlenecks and inefficiencies.

Addressing these constraints is crucial for advancing the field. Optimizing computation, reducing energy consumption, and making AI systems more scalable are ongoing challenges for developers and researchers. By overcoming these constraints, we can unlock more of the potential of AI technology.

Ethical Concerns:

Bias and Discrimination:

Bias and discrimination are not just societal problems, but they can also manifest in AI systems. When AI algorithms are trained on biased data, they can accidentally perpetuate stereotypes and strengthen existing inequalities.

For example, if an AI system is trained on historical data that reflects societal biases, it may learn to associate specific demographics with negative traits or outcomes. This can lead to discriminatory outcomes when the system is used to make decisions in areas such as hiring, lending, or criminal justice.

One notable case of biased algorithms was seen in a study conducted by ProPublica. They found that a widely used algorithm for predicting future criminal behavior had a higher false positive rate for black defendants compared to white defendants. This meant that the algorithm was more likely to wrongly label black individuals as high-risk offenders, perpetuating racial disparities within the criminal justice system.

Combating bias in AI systems starts with diverse training datasets and regular audits that look for discriminatory patterns. If an audit detects bias, the system should be adjusted to ensure fair outcomes. This requires a multi-faceted approach involving diverse datasets, rigorous testing methodologies, and ongoing vigilance to create more inclusive and unbiased AI systems that benefit everyone.
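One concrete audit is to compare false positive rates across groups, the same metric discussed in the criminal justice example above. The sketch below shows the calculation under simple assumptions: each record is a `(group, predicted_high_risk, actual_outcome)` tuple, and the group names and records are invented for illustration rather than taken from any real dataset.

```python
from collections import defaultdict

def false_positive_rate_by_group(records):
    """Return {group: FPR}, where FPR = false positives / actual negatives."""
    false_positives = defaultdict(int)
    actual_negatives = defaultdict(int)
    for group, predicted, actual in records:
        if not actual:  # only people with a negative actual outcome count toward FPR
            actual_negatives[group] += 1
            if predicted:
                false_positives[group] += 1
    return {g: false_positives[g] / n for g, n in actual_negatives.items()}

# Made-up illustrative records, not real data.
records = [
    ("A", True, False), ("A", True, False), ("A", False, False), ("A", True, True),
    ("B", True, False), ("B", False, False), ("B", False, False), ("B", False, False),
]
rates = false_positive_rate_by_group(records)
gap = max(rates.values()) - min(rates.values())
print(rates, gap)
```

A large gap between groups is a signal to investigate further. Which gap is acceptable, and which fairness metric fits a given application, are policy decisions that code alone cannot settle.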

Privacy Violations:

Privacy violations in the age of AI are a growing concern, and it’s essential to understand the risks posed by AI-enabled surveillance technologies and data collection practices. These technologies can intrude into our personal lives in unprecedented ways.

We’ve seen cases where personal privacy has already been compromised by AI-driven systems. From facial recognition technologies that can track individuals without permission, to data collection practices that harvest personal information without transparency or control, there have been numerous breaches of privacy.

To address these problems, regulatory frameworks and ethical guidelines are being developed to protect individual privacy rights. Governments around the world are working on legislation that balances the benefits of AI against the need to safeguard individual privacy. Groups such as the IEEE and ACM are also establishing ethical guidelines to ensure responsible use of AI technologies.

As we navigate this new era of artificial intelligence, it is crucial that we prioritize privacy protection. By understanding the risks involved, advocating for stronger regulations, and adhering to ethical standards, we can work towards a future where AI is used responsibly while respecting individual privacy rights.

Autonomous Weapons and Job Displacement:

Autonomous weapons systems have sparked a heated discussion surrounding their ethical implications. The idea of machines making life-and-death decisions raises worries about the potential for indiscriminate harm. As these systems become more advanced and capable, there is a rising fear that they may not possess the necessary moral judgment to differentiate between combatants and civilians.

Alongside these ethical considerations, there is also a worry regarding job displacement caused by automation and AI-driven workforce transformations. As artificial intelligence continues to progress, many worry about its impact on employment opportunities. With machines being able to perform tasks once handled by people, there is a looming fear that certain jobs may become obsolete.

Finding policy solutions and establishing ethical frameworks becomes crucial in addressing these problems. It’s important to strike a balance that harnesses the benefits of autonomous technologies while minimizing harm and ensuring job security for workers affected by automation. These discussions will play a vital role in shaping regulations and guidelines that guide the responsible use of AI, eventually aiming for a future where technology enhances society rather than causing harm or inequality.

Safety and Security Risks:

Safety Concerns in AI Applications:

AI applications raise inherent safety issues that need to be addressed. In safety-critical areas, the consequences of AI errors and failures can be severe.

To understand the gravity of such situations, consider cases where AI systems have made incorrect choices with real-world consequences. From autonomous vehicles making fatal mistakes on the road to algorithmic biases leading to discriminatory outcomes, these examples serve as a reminder of the importance of careful testing and validation.

Robust testing, validation, and fair assessment of AI systems are crucial for mitigating these problems. AI algorithms should be thoroughly tested under a variety of scenarios to identify weaknesses or biases, and they should be updated and improved on the basis of continuous evaluation.

While these problems in artificial intelligence are concerning, it’s important not to overlook the immense potential that AI holds for positive impact when used responsibly. By acknowledging and addressing these challenges head-on, we can aim for safer and more reliable AI technologies in the future.

Malicious Manipulation:

One concerning issue is adversarial attacks, where attackers exploit weaknesses in AI algorithms to deceive or manipulate their outputs. These attacks can have severe consequences across different domains. For instance, adversaries have been able to create misleading signs or images that trick the AI systems in autonomous cars into misinterpreting them.

Another example of malicious abuse is the use of AI-generated deepfakes. These manipulated videos and audio recordings can convincingly make people appear to say or do things they never did. This technology presents significant risks in areas such as misinformation campaigns and identity theft.

To enhance the resilience and security of AI systems, it’s important to apply robust strategies. One method is building defenses against adversarial attacks with techniques like adversarial training, where AI models are trained on both normal and adversarial examples. Regularly updating models with new data is also crucial for keeping up with emerging threats.
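To make adversarial training more concrete, the sketch below trains a tiny logistic regression model on each clean example plus a perturbed copy of it. The perturbation uses the fast gradient sign method (FGSM), one well-known attack chosen here purely for illustration. The toy data, the function names, and the hyperparameters (`eps`, `epochs`, `lr`) are assumptions, and the example is too small to demonstrate a real robustness gain.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def predict_proba(x, w, b):
    return sigmoid(sum(wj * xj for wj, xj in zip(w, x)) + b)

def sign(g):
    return (g > 0) - (g < 0)

def fgsm(x, y, w, b, eps):
    """Shift each feature by eps in the direction that increases the loss."""
    p = predict_proba(x, w, b)
    # For logistic loss, d(loss)/d(x_j) = (p - y) * w_j.
    return [xj + eps * sign((p - y) * wj) for xj, wj in zip(x, w)]

def train(data, eps=0.0, epochs=200, lr=0.1):
    """SGD for logistic regression; if eps > 0, also fit FGSM-perturbed copies."""
    w, b = [0.0, 0.0], 0.0
    for _ in range(epochs):
        for x, y in data:
            examples = [x]
            if eps > 0:
                examples.append(fgsm(x, y, w, b, eps))  # adversarial copy
            for ex in examples:
                err = predict_proba(ex, w, b) - y
                w = [wj - lr * err * xj for wj, xj in zip(w, ex)]
                b -= lr * err
    return w, b

def accuracy(data, w, b):
    return sum((predict_proba(x, w, b) > 0.5) == bool(y) for x, y in data) / len(data)

# Made-up, linearly separable toy data: (features, label).
data = [((-2.0, -1.5), 0), ((-1.5, -2.0), 0), ((-2.5, -2.0), 0),
        ((2.0, 1.5), 1), ((1.5, 2.0), 1), ((2.5, 2.0), 1)]

w, b = train(data, eps=0.5)
attacked = [(fgsm(x, y, w, b, 0.5), y) for x, y in data]
print("clean accuracy:", accuracy(data, w, b))
print("accuracy under attack:", accuracy(attacked, w, b))
```

Real systems apply the same loop to deep networks using an automatic differentiation library, and they typically evaluate against several attack types rather than a single one.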

Furthermore, greater transparency and stronger ethical standards in the creation and deployment of AI systems can help address these problems. Encouraging responsible practices such as thorough testing, external audits, and open collaboration among researchers can mitigate possible risks.

While challenges remain in securing AI systems from malicious manipulation, constant vigilance coupled with innovative solutions will be key in ensuring a safer future for artificial intelligence technology.

The Human Factor:

Building Trust and Transparency:

Transparency and accountability play a crucial part in AI decision-making processes. As AI becomes more integrated into our lives, it is important to understand how and why certain decisions are made. This understanding promotes trust and confidence in AI systems.

One of the problems in artificial intelligence is the lack of explainability and interpretability. Many AI systems make choices based on complex algorithms that are not easily understandable by humans. This opacity raises concerns about bias, unfairness, or even unintended effects.

To handle these concerns, strategies for fostering user trust and confidence include making AI systems more explainable and interpretable. By providing clear explanations of how decisions are made, users can better understand and trust the outcomes.

Additionally, user feedback and engagement play a significant role in improving AI systems. When users have a say in shaping the technology they use, transparency improves and developers are held accountable. Open channels for feedback allow users to voice their concerns, suggest improvements, or report any biases they notice.

In summary, ensuring transparency and accountability in artificial intelligence decision-making processes is important for building trust with users. By focusing on explainability, interpretability, and actively engaging users through feedback mechanisms, we can foster a sense of reliability that benefits both people using AI systems and society as a whole.

Human Oversight and Control:

Human supervision and involvement are crucial when it comes to AI-driven decision-making processes. Artificial intelligence (AI) systems can analyze data and make predictions, but they are not perfect.

One drawback of fully autonomous AI systems is their inability to handle ambiguous and complex circumstances. Such circumstances frequently call for human judgment, which can account for subtleties, moral considerations, and contextual knowledge that AI might miss.

Interdisciplinary cooperation can help with that. Experts across a range of disciplines, including ethics, psychology, sociology, and AI technology, can be brought together to ensure that decisions made by AI systems are well-informed and consistent with societal values.

In addition to reducing the risk of biases or mistakes in AI algorithms, human oversight lets us bring broader knowledge and better judgment to complicated problems. It also ensures that people remain accountable and responsible for the creation and application of AI technologies.

Even though AI offers clear advantages and is developing quickly, it is critical to acknowledge the value of human involvement. For autonomous systems to navigate the intricacies of real-world settings effectively, they need human judgment and interdisciplinary teamwork.

Conclusion

In conclusion, the field of Artificial Intelligence (AI) faces several challenges that need to be addressed for its responsible growth. Technical limitations, ethical concerns, safety risks, and the human factor are among the key obstacles.

Technically, AI still has limitations in understanding context and generating coherent, contextually appropriate material. Ethical concerns arise when AI is used to spread misinformation or biased content. Safety risks include the possible misuse of AI for malicious purposes or cybersecurity breaches. Lastly, the human factor cannot be overlooked, as jobs may be affected by the increasing automation of tasks.

However, ongoing research and dedicated efforts aim to mitigate these problems. Advancements in Natural Language Processing (NLP) and machine learning algorithms aim to enhance AI’s understanding of context and improve its ability to produce high-quality content. Ethical guidelines are being created to ensure responsible use of AI in content creation. Additionally, cybersecurity measures are continuously evolving to address safety risks linked with AI technologies.

Despite these problems, there is reason for optimism. Through responsible development practices and a focus on ethics, AI has the potential to change our world for the better. It can augment human capabilities, streamline processes, and ultimately contribute to progress in various fields.

I encourage you to stay informed about ongoing developments in AI research and to take part in discussions about its effects on society and job markets. By actively engaging with this topic, we can collectively build a future where AI benefits us all while minimizing any possible negative impacts.